Recently, the first-ever image of a black hole was splashed across front pages and news feeds around the world. The image was made possible in part by tremendous computing power that analyzed millions of gigabytes of data collected from space.

Research that uses computer algorithms to create pictures from massive volumes of data is also going on at Memorial Sloan Kettering. Instead of probing the outer limits of the universe, this work seeks new ways to see what’s going on inside our bodies.

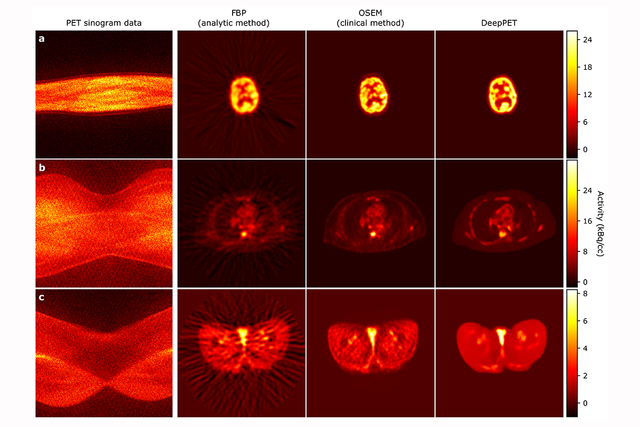

In a paper published in the May 2019 issue of Medical Image Analysis, MSK investigators led by medical physics researcher Ida Häggström describe a new method they developed for creating PET images. The system generates images more than 100 times faster than conventional techniques, and the images are also of higher quality.

“Using deep learning, we trained our convolutional neural network to transform raw PET data into images,” Dr. Häggström says. “No one has done PET imaging in this way before.” Convolutional neural networks are computer systems loosely modeled on human vision: they learn which shapes and features in an image are important.

Deep learning is a type of artificial intelligence. In this technique, a computer system learns to recognize features in training data and then applies that knowledge to new, unseen data. This allows the system to solve tasks such as classifying cancerous lesions, predicting treatment outcomes, or interpreting medical charts. The MSK researchers, including medical physicist Ross Schmidtlein and data scientist Thomas Fuchs, the study’s senior author, named their new technique DeepPET.

Peering into the Body’s Inner Workings

PET, short for positron-emission tomography, is one of several imaging technologies that have changed the diagnosis and treatment of cancer, as well as other diseases, over the past few decades. Other imaging technologies, such as CT and MRI, generate pictures of anatomical structures in the body. PET, on the other hand, allows doctors to see functional activity in cells.

The ability to see this activity is especially important for studying tumors, which tend to have dynamic metabolisms. PET relies on radioactively labeled, biologically active molecules called tracers, which the PET scanner can detect. Depending on which tracers are used, PET can image glucose uptake or cell growth in tissues, among other phenomena. Revealing this activity can help doctors distinguish between a rapidly growing tumor and a benign mass of cells.

PET is often used along with CT or MRI. The combination provides comprehensive information about a tumor’s location as well as its metabolic activity. Dr. Häggström says that if DeepPET can be developed for clinical use, it also could be combined with these other methods.

Improving on an Important Technique

There are drawbacks to PET as it’s currently performed. Processing the data and creating images can take a long time. Additionally, the images are not always clear. The researchers wanted to look for a better approach.

The team began by training the computer network on large amounts of PET data, along with the associated images. “We wanted the computer to learn how to use data to construct an image,” Dr. Häggström notes. The training used simulated scan data: artificial images that resembled what might come from a human body but were not taken from real patients.

The images from the new system were not only generated much faster than with current PET technologies but were also clearer.

Conventionally, PET images are generated through an iterative process in which the current image estimate is gradually updated to better match the measured data. DeepPET has learned the PET scanner’s physical and statistical characteristics, as well as how typical PET images look, so no iterations are required. Instead, the image is generated in a single, fast computation.
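To make the "repeating process" concrete, here is a minimal sketch of one widely used conventional algorithm, maximum-likelihood expectation maximization (MLEM). The article does not say which iterative method it compares against, so MLEM is an assumption chosen for illustration. The system matrix `A` (how likely a decay in each image pixel is to be counted in each detector bin), the tiny two-pixel "image," and the noise-free counts are all hypothetical. DeepPET's trained network, which replaces this whole loop with one pass, is not reproduced here.

```python
def forward_project(A, x):
    """Predicted counts in each detector bin: (A x)_i."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def mlem(A, y, n_iter=50):
    """Iteratively refine an image estimate x so that A x matches measured counts y."""
    n_bins, n_pix = len(A), len(A[0])
    # Sensitivity: total probability that a decay in pixel j is detected in any bin.
    sens = [sum(A[i][j] for i in range(n_bins)) for j in range(n_pix)]
    x = [1.0] * n_pix  # start from a uniform image
    for _ in range(n_iter):
        proj = forward_project(A, x)
        # Ratio of measured to predicted counts (skip bins with zero prediction).
        ratio = [y_i / p_i if p_i > 0 else 0.0 for y_i, p_i in zip(y, proj)]
        # Back-project the ratios and update each pixel multiplicatively.
        x = [x[j] / sens[j] * sum(A[i][j] * ratio[i] for i in range(n_bins))
             for j in range(n_pix)]
    return x


# Hypothetical toy scanner: 3 detector bins viewing a 2-pixel image.
A = [[1.0, 0.0],
     [0.5, 0.5],
     [0.0, 1.0]]
true_image = [4.0, 2.0]
counts = forward_project(A, true_image)  # noise-free "measurements"
estimate = mlem(A, counts)
print(estimate)
```

Each pass through the loop requires a forward projection and a back-projection over the full data, and real scanners need many such passes over millions of detector bins. That repeated cost is what a single learned computation avoids.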

Dr. Häggström’s team is currently getting the system ready for clinical testing. She notes that MSK is the ideal place to do this kind of research. “MSK has clinical data that we can use to test this system. We also have expert radiologists who can look at these images and interpret what they mean for a diagnosis.

“By combining that expertise with the state-of-the-art computational resources that are available here, we have a great opportunity to have a direct clinical impact,” she adds. “The gain we’ve seen in reconstruction speed and image quality should lead to more efficient image evaluation and more reliable diagnoses and treatment decisions, ultimately leading to improved care for our patients.”